Status: Rough working notes – me thinking aloud. Speculative, and likely contains errors, misconceptions, and omissions; thoughtful, informed comments are welcome. Intended for a general scientifically curious audience, not requiring much detailed specialist background.

It is sometimes said physics is nearing its end – closing in on a “theory of everything” – because we understand so much about the basic rules governing the particles and forces in our universe. This is like asserting that computer science ended in 1936 with the Turing machine, computing's “theory of everything”. Of course, that's not what happened. Rather, the invention of the Turing machine opened a new creative frontier, enabling an extraordinary profusion of new ideas.

Science is going through a similarly exciting transition today. Until recently humanity worked with matter much like we did computing prior to Turing, using a patchwork of bespoke tools and ideas. But now we understand the fundamentals of how matter works: we have a fantastic theory describing the elementary particles and forces, and we're getting increasingly good at manipulating matter. And so we're beginning to ask: what can we build, in principle? Not just in a practical sense, with the tools that happen to be at hand, but in a fundamental sense: what is allowed by the laws of physics?

Of course, this implies a whole world of questions: What qualities are possible in matter? Can we do for matter what Turing and company did for computation? Can we invent new high-level abstractions and design principles for matter, similar to what people like John McCarthy and Alan Kay did for computing? What design space is opened up when we control matter as well as we control pixels on a computer screen? What beautiful new design ideas are possible?

Most of the matter in our everyday experience is constructed from just three particles: protons, electrons, and neutrons. These can be used to build an astonishing array of different things: diamonds; human beings; galaxies; black holes; iPhones. With sufficiently good tweezers and a lot of patience you could reassemble a human being into a bicycle of comparable mass; and vice versa.

So, what else can we build from protons, electrons, and neutrons? [Martin Rees has pointed out that we humans tend to engage in particle chauvinism, often assuming that what we think of as “ordinary matter” is all there is to matter. Indeed, I've engaged in just such a chauvinism in asserting above that “we understand the fundamentals of how matter works”. However, based on what we currently suspect about dark matter, so-called “ordinary matter” may actually be rather unusual, the exception rather than the rule. Nonetheless, in the absence of a good understanding of dark matter I shall continue to engage in particle chauvinism, and restrict myself in this essay to ordinary matter. Indeed, I shall go further and restrict the discussion (mostly) to protons, electrons, and neutrons.]

It seems likely we can build things that would astonish us, things going radically beyond anything we can even conceive of today, much less build.

Consider that a billion years ago conscious minds were absent from the earth, except in quite primitive forms. Might there be forms of matter possible which are as different from anything familiar to us today as consciousness is from a rock? [Some people don't believe consciousness is a property of matter. There are limited senses in which that is reasonable: certainly, matter is a substrate, and aspects of consciousness may be independent of the details of that substrate, in a manner analogous to the way an algorithm has an existence independent of the computer it runs on. Nonetheless, I don't think there is much serious reason to doubt that consciousness is a property that emerges out of ordinary matter.]

What might those forms be?

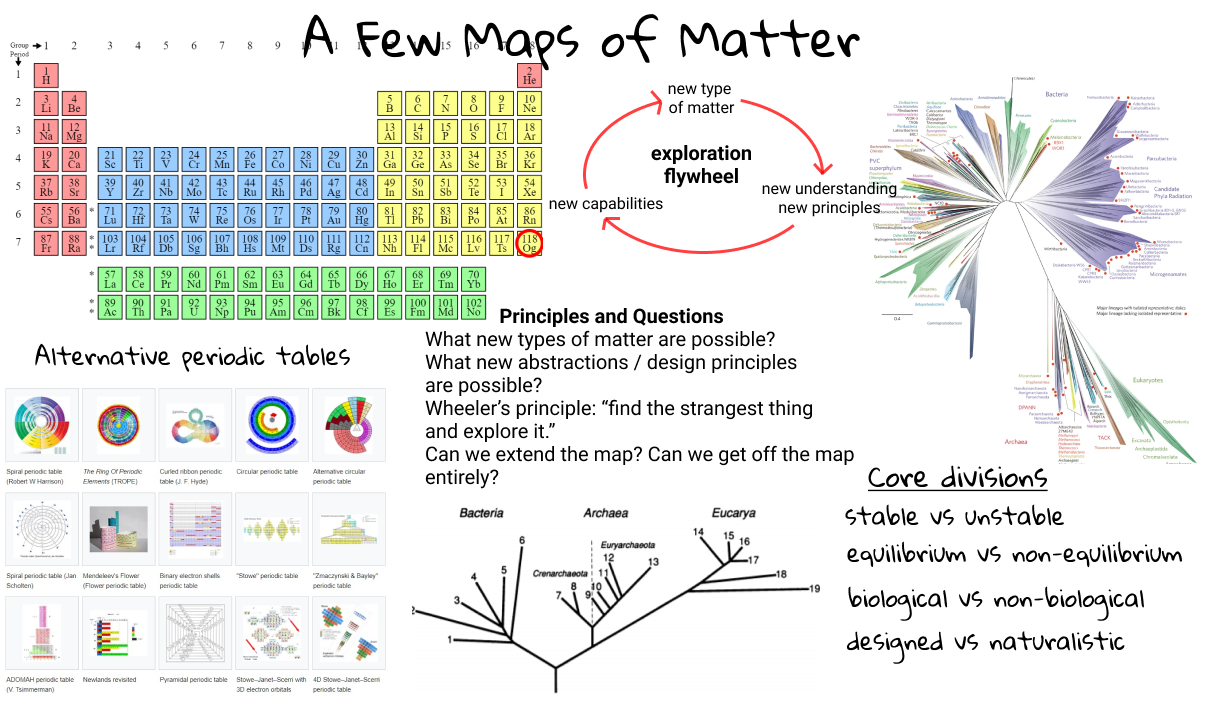

One way to explore the “what can we build?” question is to look at different maps of matter. Probably the best known such map is the periodic table, showing all the different types of atoms which make up most of the matter in the world around us. But there are many other maps of matter. There are phylogenetic trees, which show the relationship between different types of life. There are maps of the different types of stars, of the different phases of matter, of all the proteins we've found. And many more.

In these notes we'll go on safari through several such maps of matter. We won't go too deep into any individual map – everything I discuss could be (often has been) the subject of PhD theses, or even an entire field. Instead, we're going to look rapidly at several different ways we explore matter. The purpose is to discern some general principles that can help us explore in generative ways.

When I began writing these notes I had a whimsical question in mind: is there a single, unified map we can use to understand all of matter? I hoped the attempt to make such a unified map would give me some insight into the question “what can we build, in principle?” And so, as I wrote the notes I toyed with multiple ways of making a unified map. Eventually, I realized matter is too rich and variegated for a really good unified map to be possible. What's more, to do even a passing job these notes would need to be a hundred times longer! Nonetheless, I found the attempt stimulating. And so at the end of the notes I show a single diagram which encapsulates many of the ideas for exploration developed in these notes. It's grossly incomplete, but hopefully interesting and generative.

The strangest places in the periodic table

The physicist John Wheeler once stated a useful principle to guide research: “In any field, find the strangest thing and explore it”. One great thing about maps is that they suggest strange things. This is true even when the map itself is familiar, like the periodic table. We can ask ourselves: where are the strangest “places” in the periodic table? And then challenge ourselves to explore those places.

One candidate for the strangest place in the periodic table is the most recently discovered chemical element, oganesson. It's the heaviest known element, with an atomic number of 118. It's highlighted below with a red circle in the bottom right [image adapted from images due to users “Double sharp” and “Offnfopt” on Wikipedia]:

Oganesson was discovered in 2002 by a team of scientists headed by Yuri Oganessian, after being predicted in 1895 by Hans Peter Jørgen Julius Thomsen. It was made by using calcium ions to bombard a target of californium atoms, at very high speed. Only a handful of oganesson atoms have ever been observed – around a half dozen, with several of those observations regarded as unconfirmed.

We don't know much for sure about oganesson – the handful of atoms observed decayed too quickly to get much idea of what oganesson does. Despite this, intensive theoretical studies have been done, and some surprising predictions made. Oganesson is in the final column of the periodic table, and these are of course the “noble gases”, highly non-reactive gases like helium and argon.

At least, that's the story we learn in high school chemistry. In fact, oganesson confounds these expectations. Theoretical calculations suggest oganesson is likely to be a solid semiconductor at standard temperature and pressure, not a gas. Furthermore, it may be significantly reactive, unlike the other noble gases. [Clinton S. Nash, Atomic and Molecular Properties of Elements 112, 114, and 118 (2005). Note that the other noble gases are at least somewhat reactive (disodium helide, anyone?) – Wikipedia's discussion is a good place to start – but oganesson is predicted to be significantly more so.]

There is a standard argument for why noble gases are non-reactive gases – the gist is to understand the electron orbital shell structure, the number of electrons in each, and why full shells are particularly stable and lead to non-reactive elements. What you learn from oganesson is that some of the principles underlying that argument can't be quite right. I must admit, I haven't worked through the details of how the standard argument breaks down (beyond quickly skimming the above paper); nonetheless, it's extremely useful to have a little flag in my head saying that the standard argument about electron orbitals and noble gases actually breaks down for some elements. That argument is often presented as being based on universal principles, but those principles are not, in fact, universal.

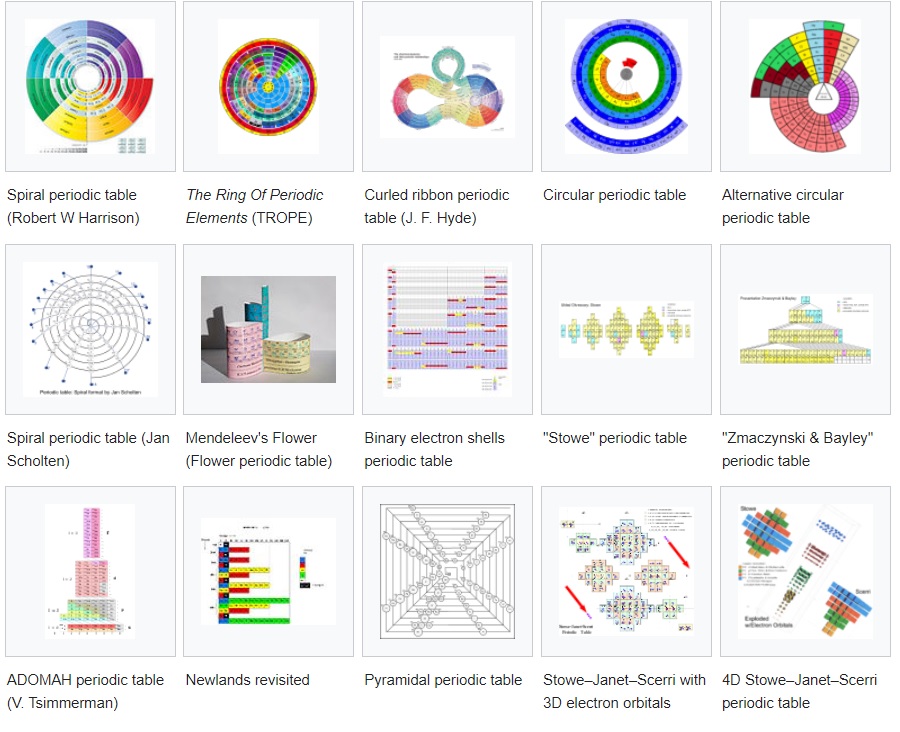

And so one benefit of exploring oganesson is that it improves other parts of your understanding of matter, even if only by informing you of limits to that understanding. And it pokes at you: the periodic table has the shape it does because of those underlying principles. If those principles break down, should the shape of the periodic table be changed? And that in turn suggests a different question, one about human psychology and understanding rather than the principles of nature: what are the best alternative ways of visualizing the elements? Are there better ways than the standard periodic table? Just for fun, let me show you a snapshot from Wikipedia's page of alternative periodic tables:

I have a biologist friend, Laura Deming, who has pointed out what she calls the biological flywheel. [Laura Deming, Sequencing is the new microscope (2018). Incidentally, searching on “biology flywheel” also yields this stimulating essay on a different kind of flywheel, in synthetic biology.] The flywheel works like this. As we get better at biology, we discover more and more biological processes – all sorts of amazing proteins and other molecular nanomachines [animation of a kinesin protein walking along a microtubule, by Wikipedia user “Jzp706”]:

When we discover such a machine, and start to understand the principles it operates by, that in turn becomes part of humanity's toolkit for making more discoveries. It's a kind of bootstrap or flywheel generating more progress. Here are some examples, quoted in Deming's essay:

If you look at the most important techniques in biology, in the second half of the 1900s, they’re all driven by tools discovered in biology itself. Biologists aren’t just finding new things - they’re making their new tools from biological reagents. PCR (everything that drives PCR, apart from the heater/cooler which is 1600s thermodynamics, is either itself DNA or something made by DNA), DNA sequencing (sequencing by synthesis - we use cameras/electrical detection/CMOS chips as the output, but the hijacking the way the cell makes DNA proteins remains at the heart of the technique), cloning (we cut up DNA with proteins made from DNA, stick the DNA into bacteria so living organisms can make more copies of it for us), gene editing (CRISPR is obviously made from DNA and with RNA attached), ELISA (need the ability to detect fluorescence - optics - and process the signal, but antibodies lie at the heart of this principle), affinity chromatography (liquid chromatography arguably uses physical principles like steric hindrance, or charge, but those can be traced back to the 1800s - antibodies and cloning have revolutionized this technique), FACS uses the same charge principles that western blots do, but with the addition of antibodies.

… If you’d asked a physicist in the early 1900s how we’d figure out the complicated, tangled structure of DNA, he’d probably say to look at it with a microscope. Sequencing has become the new microscope. It’s easier for us to cross-link, fragment and sequence a full genome to figure out its 3-dimensional structure than it is for us to figure that out by looking at it head-on. We used to rely on photons and electrons bouncing off of biological sample to tell us what was going on down there. Now we’re asking biology directly - and often the information we get back comes through a natural biological reagent like DNA. Which we then sequence using motors made from the DNA itself!

Many recent examples of this biological flywheel may be seen in the development of the SARS-CoV-2 vaccines for Covid-19. Here's one: historically, vaccines have typically been developed by delivering inactivated fragments of the virus they protect against. The human immune system learns to recognize and destroy those fragments, and this provides protection against the underlying disease. But the messenger RNA (mRNA) vaccines for SARS-CoV-2 use a different approach. Instead of delivering inactivated virus, they simply deliver mRNA inside the body, and then rely on human cells to use the mRNA in vivo to manufacture the spike protein characteristic of the disease. In some sense, they're using the machinery inside the human body to eliminate one complex step in the vaccine manufacturing process. This greatly simplifies the design and manufacture of the vaccine.

There are similar flywheels all through science, and they drive a lot of discovery. One virtue of exploring the periodic table is that it's another example of such a flywheel. As you explore you unlock new substances and new understanding: the discovery of uranium helped lead to plutonium; that helped lead to curium; that helped lead to californium; which in turn played a role in the discovery of oganesson. It's a flywheel of exploration and discovery:

It's perhaps a little too tongue-in-cheek, but I think there's at least a grain of truth in saying much of science has really been about discovering these flywheels, a kind of flywheel flywheel.

Extending and escaping our maps

Let's come back to the map we were exploring, the periodic table. Another good question to ask is: “can we extend the map?” And going even further: “is there stuff that could never be on the map, even if we extend it?”

I'm going to mostly focus on the second question. The first question is, of course, a great question. There are many people working on making new elements, and lots of exciting questions. What are the ultimate limits of the periodic table? Is there some upper limit on the atomic number? There's disagreement on this question: according to Wikipedia, there are proposals that it ends at element number 128, 137, 146, 155, or 173.

It perhaps sounds strange that we have such a disagreement. In fact, it's in part a reflection of some flexibility in what we mean by atom. In some sense it's important that an atom be a reasonably stable, bound system. But stability is a matter of degree: even relatively common elements like uranium are unstable, decaying into other substances, like thorium. Indeed, if proton decay occurs, then in some sense all atoms are unstable.
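To get a feel for how wide the range in “stability is a matter of degree” really is, here is a minimal sketch of the standard exponential decay law. The half-life figures are rough values I'm assuming for illustration (about 4.5 billion years for uranium-238, and under a millisecond for oganesson-294); they are not taken from the sources discussed above, and the function name is just a label I've chosen.

```python
def fraction_remaining(elapsed, half_life):
    """Fraction of an initial sample that has not yet decayed.

    Both arguments must be expressed in the same time unit.
    """
    return 0.5 ** (elapsed / half_life)


SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Approximate half-lives, assumed for illustration only.
U238_HALF_LIFE_S = 4.47e9 * SECONDS_PER_YEAR  # uranium-238
OG294_HALF_LIFE_S = 0.7e-3                    # oganesson-294 (rough)

elapsed_s = 0.01  # ten milliseconds

print("uranium-238 remaining:", fraction_remaining(elapsed_s, U238_HALF_LIFE_S))
print("oganesson-294 remaining:", fraction_remaining(elapsed_s, OG294_HALF_LIFE_S))
```

Under these assumed numbers, ten milliseconds is enough for almost all of an oganesson sample to decay, while the uranium sample is essentially untouched. Both are “unstable”, but the timescales differ by something like twenty orders of magnitude.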

And so the disagreement about the possible number of elements depends in part on how stable you require a system to be to qualify as an atom. I wouldn't be surprised if in the far future we can cram far more than 173 protons together into a nucleus-sized region; the only in-principle limit I can think of is Fermi pressure. But such pseudo-atoms won't ordinarily be very stable (though perhaps we can find clever ways of stabilizing them)!

What about things which aren't on our map (the periodic table) at all? Can we get entirely off the periodic table?

Of course, we can. Indeed, depending on whereabouts you are in the universe, atoms may actually be a relatively uncommon (or unstable) form of matter.

For example, the sun is often described as being made up of hydrogen and helium, with trace amounts of other elements. That's true near the surface of the sun, which is at a temperature of about 6,000 kelvin. But as you go inward the sun gets far hotter, eventually rising to millions of kelvin. That increasing temperature rips the hydrogen molecules and even the hydrogen atoms apart – they ionize, meaning that the single electron is ripped apart from the single proton. And so inside the core of the sun it's no longer really hydrogen atoms, but rather a giant sea of protons and electrons, largely unbound to one another (together with a lot of ionized helium, as well). In the core it doesn't make sense to say the sun is made of atoms.
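A rough way to see why the core can't hold atoms together is to compare the typical thermal energy per particle, kT, with the 13.6 eV needed to strip the electron from a hydrogen atom. The sketch below does that arithmetic. The core temperature of 15 million kelvin is a figure I'm assuming (the text above only says “millions”), and comparing kT to the ionization energy is only a crude guide: the real degree of ionization also depends on density.

```python
BOLTZMANN_EV_PER_K = 8.617e-5   # Boltzmann constant, in eV per kelvin
HYDROGEN_IONIZATION_EV = 13.6   # energy to remove hydrogen's electron


def thermal_energy_ev(temperature_k):
    """Characteristic thermal energy kT, in electron volts."""
    return BOLTZMANN_EV_PER_K * temperature_k


# Surface temperature is from the text; the core value is an assumption.
for label, temperature_k in [("surface", 6.0e3), ("core (assumed)", 1.5e7)]:
    kt = thermal_energy_ev(temperature_k)
    ratio = kt / HYDROGEN_IONIZATION_EV
    print(f"{label}: kT = {kt:.3g} eV, which is {ratio:.3g} x the ionization energy")
```

At the surface kT is a small fraction of the ionization energy, so atoms can survive; in the core it is far larger, so typical collisions carry much more energy than is needed to knock an electron free.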

Of course, we can get far more exotic. Inside a neutron star for the most part you won't find any atoms (though there are some ionized atoms near the surface). A neutron star is formed from the collapse of a massive star – so massive that the gravitational forces crush the electrons into the nucleus, where they merge with the protons to form neutrons. And you end up with an incredibly dense sea of neutrons and other, potentially more exotic, particles – a liter of neutron star has a mass on the order of a hundred billion tonnes. [Neutron stars may not just contain a sea of neutrons, but also other even stranger states of matter. A stimulating discussion may be found here.] If you somehow lived inside a neutron star you wouldn't think in terms of atoms at all. Rather, you'd need patterns made out of neutrons. I wonder if it's possible to do that in a stable way? [The astronomer Frank Drake apparently proposed a nuclear “chemistry” in a 1973 interview (which I haven't read, and wasn't able to find online). The idea was later fleshed out into the science fiction novel “Dragon's Egg” by Robert Forward.] Less speculatively, we can take away that it's actually a provincial point of view, a kind of atomic chauvinism, to regard atoms as typical, “the fundamental constituents of matter” as so many of us are taught when growing up.
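The mass-per-liter figure can be sanity-checked with a back-of-envelope calculation. This is only a sketch: I'm assuming a neutron star of 1.4 solar masses and 12 km radius (plausible-looking values, but not taken from any source cited in these notes), and treating it as a uniform sphere, which a real neutron star is not.

```python
import math

SOLAR_MASS_KG = 1.989e30
LITER_IN_M3 = 1e-3
KG_PER_TONNE = 1e3


def mean_density_kg_per_m3(mass_kg, radius_m):
    """Mean density of a uniform sphere with the given mass and radius."""
    volume_m3 = (4.0 / 3.0) * math.pi * radius_m ** 3
    return mass_kg / volume_m3


# Assumed values, for illustration only.
mass_kg = 1.4 * SOLAR_MASS_KG
radius_m = 12e3

density = mean_density_kg_per_m3(mass_kg, radius_m)
tonnes_per_liter = density * LITER_IN_M3 / KG_PER_TONNE
print(f"mean density: {density:.2e} kg/m^3")
print(f"one liter: {tonnes_per_liter:.1e} tonnes")
```

With these inputs the answer comes out at a few hundred billion tonnes per liter, so “on the order of a hundred billion tonnes” is the right ballpark.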

The flywheel of design imagination

So far, we've been concentrating mostly on types of matter found in nature, like neutron stars. But we can turn this around, and ask questions about design: what kinds of matter configuration can we stabilize in principle? In other words, instead of looking for naturally occurring substances, let's ask the question: what types of matter do the laws of physics enable us to design? In this designer's viewpoint you ask how you can engineer reality to fit what you want. And the emphasis isn't so much on the “engineer” as on “what you want”. Because it turns out that figuring out what is possible requires immense imagination.

This design point of view is very different than the naturalistic view of matter, where you continually explore what occurs “naturally”, with relatively minimal interventions. And this designerly point of view leads to very deep questions:

- What patterns of matter can we stabilize, in principle? What patterns cannot be stabilized, by any means?

- What patterns of matter and fields are possible, in principle? How can we create them? What patterns are impossible?

- Are there fundamental new design abstractions we can invent for matter? In some sense atoms (or the plasmas inside the sun, or the ribosomes inside our cells) are design abstractions supplied by nature. Can we find new ones ourselves?

Even a very simple question like “What pattern of static electromagnetic fields can we create?” is actually a pretty great question. And then: can we build a universal device to create that field? And we can ask analogous questions for other types of matter and fields.

Many non-designers think of design as relatively unimportant. Once on Twitter an experienced programmer and manager made the following comment about design in the software realm:

Honestly, I have a hard time taking the idea of a “Designer” as a whole job seriously. Like you lay out buttons and text? That's it?

Now, what does it mean that (visual) design is “lay[ing] out buttons and text”? It seems a little like claiming that Shakespeare was merely laying out letters of the alphabet in a particularly well-chosen fashion. There is some sense in which that's true. But the point of design, as with Shakespeare and great writing, is to discover powerful higher-order ideas. Those ideas are then reflected in the layout. In fact, the very best designers invent amazing new abstractions. This is true in both the physical world and the software world. Consider Alan Kay, in A Conversation with Alan Kay (2004), on the importance of powerful design ideas in physical construction:

If you look at software today, through the lens of the history of engineering, it’s certainly engineering of a sort—but it’s the kind of engineering that people without the concept of the arch did. Most software today is very much like an Egyptian pyramid with millions of bricks piled on top of each other, with no structural integrity, but just done by brute force and thousands of slaves.

I would compare the Smalltalk stuff that we did in the ’70s with something like a Gothic cathedral. We had two ideas, really. One of them we got from Lisp: late binding. The other one was the idea of objects. Those gave us something a little bit like the arch, so we were able to make complex, seemingly large structures out of very little material, but I wouldn’t put us much past the engineering of 1,000 years ago.

Design at its best is all about the discovery of such powerful ideas, like the arch, late binding, software objects, and so on. I greatly admire Bret Victor's work, and one of the things Victor does better than almost anybody is create new visual abstractions, abstractions made from pixels. To what extent can we do the same for matter?

Of course, at the level of everyday objects, this is a commonplace activity – obviously, we've had inventors and designers for millennia! But starting from a fundamental level – asking what design possibilities are latent within the laws of physics – is a much more recent development.

Personally, I first began to understand this with Alexei Kitaev's late 1990s invention of topological quantum computing. [Alexei Y. Kitaev, Fault-tolerant quantum computation by anyons (1997).] Ordinarily, a quantum computer is a terribly fragile device – the quantum information can be destroyed by a single stray photon or atom. You need to use elaborate ways of stabilizing the quantum information, using ideas like quantum error-correcting codes.

Kitaev had a different idea. Speaking very roughly, he asked whether there might be a stable phase of matter that wanted to quantum compute. That is, in something like the same way that liquids like to flow and superconductors like to superconduct, Kitaev's phase of matter would be a material that naturally liked to quantum compute.

Simply posing the question – asking whether such a phase of matter is possible – is already an audacious act of imagination. But then Kitaev used a bundle of very clever ideas to design a relatively natural and plausible system which would form a phase of matter that naturally quantum computed.

Of course, this design-oriented approach isn't solely due to Kitaev. It can be seen in many other examples. You can see it in Frank Wilczek's invention of anyons and of time crystals, for instance. [See, for example, Frank Wilczek, Quantum Mechanics of Fractional-Spin Particles (1982), and Frank Wilczek, Quantum Time Crystals (2012).] And in the creation of negative index of refraction materials. And in many other places. Kitaev's idea is shockingly audacious and technically brilliant, but it's also merely part of a broader shift to a design point of view in the foundations of physics. Outside physics, this design point of view is of course already present in many parts of science – the synthesis of drugs by chemists is one example. And, of course, it's at the heart of much engineering and invention. What I'm emphasizing here, though, is the shift in viewpoint from design within some extant realm of available materials and tools, to design which takes as its starting point the fundamental laws of the universe.

When a certain type of person hears this they respond “you're talking about Phil Anderson's concept of emergence!” [P. W. Anderson, More is Different (1972).] They're not wrong, exactly, but it's a shallow response which misses much of the point. I'm not (for the most part) talking about the kind of emergence that has been studied in the past in physics. That still adopts a naturalistic point of view, trying to understand the objects extant in the universe, or which can be made using relatively obvious interventions (e.g., discovering superfluids by lowering temperature).

Of course that does indeed turn out to be a good source of emergent phenomena, and has been the traditional approach of condensed matter physics and (much of) chemistry – things like superfluidity, superconductors, and many other striking emergent phenomena have been discovered that way. But the shift to a design point of view goes much further, to a place very different from how we have historically approached physics. It asserts that there are a large number of striking emergent phenomena latent inside fundamental laws, and that many of those cannot be discovered merely by exploring the natural world, and asking what materials there are; nor through obvious interventions like lowering the temperature. Rather, they require tremendous design imagination to invent. Inventing topological quantum computing was one such example: although certainly inspired by past naturalistic discoveries, like the fractional quantum Hall effect, it also had an enormous imaginative component.

Put another way: the conventional use of “emergent” has a rather passive flavour. What I'm talking about is a much more active imaginative stance, the kind of stance taken by people like Bret Victor or Satoshi or Picasso.

Over the long term, I believe there's a fair chance physics will end up principally as a design science: really about developing new levels of abstraction in the physical world. People like Kitaev, Wilczek, and even John von Neumann (with his work on cellular automata, universal constructors, and self-replication) will all look to have been working on taking first steps up from the fundamental laws. They're understanding what qualities it is possible to build into matter, understanding if there are composable abstractions, and so on.

This shift of point of view changes our relationship to matter to what Herb Simon called the “sciences of the artificial”. I believe that retrospectively it'll be viewed as one of the most important shifts in science in the 20th and 21st centuries.

As I write this, I realize I'm struggling to clearly articulate what I believe design is really all about, or why it matters. At the cost of some repetition, let me take another shot.

Part of the trouble is that the word “design” is often used to mean essentially “making things pretty” or “making things useable”.

Those are valuable endeavours, but they're not what I mean.

At its deepest, design is about inventing entirely new types of objects and of actions. It's the type of thinking that results in someone conjuring up the rules of chess; or inventing topological quantum computing; or in developing the polymerase chain reaction. In each case we take the rules of reality and find latent within them some other very different set of rules, a set of rules for a new reality, one that generates beautiful patterns of its own.

I first got seriously interested in design after seeing Bret Victor's work in 2012, and thinking “I want to do that”. But also, at the same time: “what is he actually doing?” I then spent many years trying to understand how designers invent powerful new objects and actions and abstractions. [A partial summary of my thinking may be found in Thought as a Technology (2016).] It was far more difficult than I anticipated; I now believe it's one of the hardest and deepest things human beings do, and currently only a handful of people in the world are really good at it. Furthermore, it's a skill almost entirely different from the skills scientists usually learn. [There is, however, some considerable overlap with mathematics, especially the art of finding fundamental new mathematical definitions.]

Are powerful design ideas invented or discovered? Certainly, they're not arbitrary. They're much more like mathematics: we have a set of basic rules, and can then discover powerful patterns latent in those rules. In that sense we may call it Plato's design flywheel, or the flywheel of design imagination: we start with the basic laws governing matter, and explore around them, eventually developing powerful new principles. Those, in turn, open up new worlds to explore. And, in my opinion, it's quite likely that will happen in an open-ended fashion, an endless frontier of new worlds. [I realize I'm leaning heavily on the flywheel analogy in these notes – flywheels here, flywheels there, flywheels everywhere. It's perhaps a geographic hazard – I live in Silicon Valley, where many entrepreneurs and investors are obsessed with finding flywheels to generate growth. Still, the discovery flywheel is a genuine pattern in science, and a useful pattern to be on the lookout for. But it's still just one heuristic for how discovery works, and there are many other powerful heuristics.]

Phylogenetic trees and the shape of biology

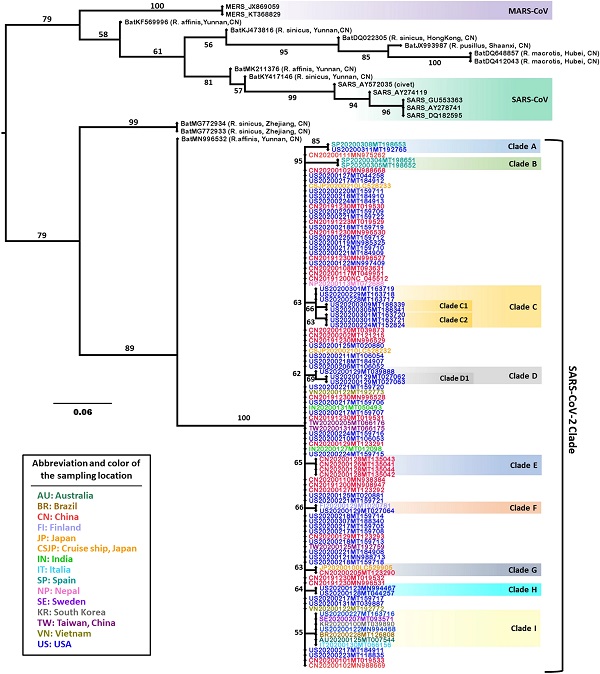

Let's take a look at a very different type of map, the phylogenetic tree or phylogeny. You've perhaps seen phylogenies in relation to the coronaviruses, including the SARS-CoV-2 virus which caused the Covid-19 pandemic [image from Li, Liu, Yang et al, Phylogenetic supertree reveals detailed evolution of SARS-CoV-2 (2020)]:

Such trees show the evolution of a genome over time. [I'm speaking loosely here. As I understand it, the nodes in phylogenies are typically at the species (or higher) level – they don't usually represent single genomes.] In some sense, all life on earth can likely be put into a single giant phylogenetic tree, going all the way back to the beginning of life. [There are of course some caveats that may complicate the tree structure, e.g.: (1) if life originated multiple times on earth; and (2) horizontal gene transfer (and related ideas like endosymbiosis).] It'd be a fun project to visualize as much of that as possible – you can imagine an online viewer that would let you zoom in and out, view at multiple levels of abstraction, and extend and revise the phylogeny, as appropriate.
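As a toy illustration of what “view at multiple levels of abstraction” might mean, here is a minimal sketch of a phylogeny as a nested data structure, with a crude “zoom” that shows the tree only down to a chosen depth. The `Clade` class, its method names, and the heavily simplified tree are all my own inventions for illustration; a real viewer would carry branch lengths, dates, and supporting evidence.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Clade:
    """A node in a toy phylogeny: a name plus zero or more descendant clades."""
    name: str
    children: List["Clade"] = field(default_factory=list)

    def leaves(self) -> List[str]:
        """Names of all tips at or below this node, left to right."""
        if not self.children:
            return [self.name]
        return [leaf for child in self.children for leaf in child.leaves()]

    def outline(self, max_depth: int, depth: int = 0) -> List[str]:
        """Indented view of the tree, cut off below max_depth (the 'zoom')."""
        lines = ["  " * depth + self.name]
        if depth < max_depth:
            for child in self.children:
                lines.extend(child.outline(max_depth, depth + 1))
        return lines


# A heavily simplified, illustrative tree (not a real dataset).
tree = Clade("Life", [
    Clade("Bacteria"),
    Clade("Archaea + Eucarya", [
        Clade("Archaea"),
        Clade("Eucarya", [Clade("Animals"), Clade("Plants"), Clade("Fungi")]),
    ]),
])

print("\n".join(tree.outline(max_depth=1)))  # zoomed out
print(tree.leaves())                         # every tip in the tree
```

Extending or revising the phylogeny then amounts to adding or moving `Clade` nodes, and zooming is just a change of `max_depth`.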

Phylogenies are useful for many reasons. In the context of SARS-CoV-2, epidemiologists can use them to keep track of the way the virus is changing and spreading. For instance, an article by da Silva Filipe et al [Ana da Silva Filipe et al, Genomic epidemiology reveals multiple introductions of SARS-CoV-2 from mainland Europe into Scotland (2020)] used a combination of phylogeny and epidemiology to estimate that SARS-CoV-2 was introduced to Scotland on at least 283 separate occasions, during February and March 2020, and that the introductions came from mainland Europe (not China). In a sense, phylogeny is a kind of macroscope, letting you see the whole Covid pandemic. [The term macroscope comes from a remarkable book by Joël de Rosnay, The Macroscope (1979).] That macroscope motivates you to ask questions about how the structure of the virus (and of the pandemic) has changed; that, in turn, helps suggest and perhaps answer other important questions: are vaccines as efficacious against all variants; do we need additional booster shots; and so on. Phylogenies are a way of seeing.

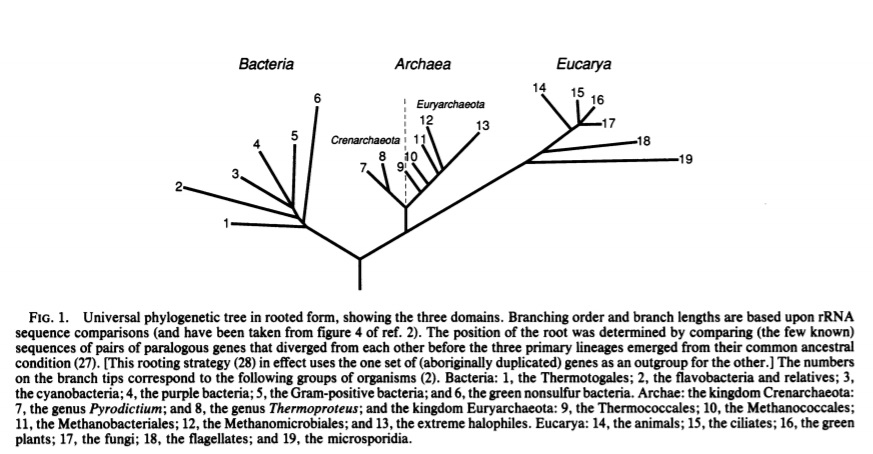

Phylogenetic trees have been used to do even more interesting things. Here's one of the most famous phylogenies ever:

It's out of a famous 1990 paper by Woese, Kandler, and Wheelis. [Carl R. Woese, Otto Kandler, and Mark L. Wheelis, Towards a natural system of organisms: Proposal for the domains Archaea, Bacteria, and Eucarya (1990). That paper built on earlier work by Woese and collaborators, e.g., Carl R. Woese and George E. Fox, Phylogenetic structure of the prokaryotic domain: The primary kingdoms (1977).] Prior to that paper, biologists divided life into two broad classes: the prokaryotes and the eukaryotes. Historically, the eukaryotes have usually been defined as organisms whose cells have a nucleus, while prokaryotes do not. What Woese et al did was point out that a better classification is possible. They went down to the molecular level, and used molecular evidence to make inferences about the phylogeny. The quote below captures the flavour of their argument – you don't need to understand all the details; the point is that they're using molecular reasoning to make broad inferences about the structure of the tree of life.

[Note that what Woese et al call eubacteria we today call bacteria, and what they call archaebacteria we today call archaea. Indeed, today's terminology is suggested later in this paper.]

It is only on the molecular level that we see the living world divide into three distinct primary groups. For every well-characterized molecular system there exists a characteristic eubacterial, archaebacterial, and eukaryotic version, which all members of each group share. Ribosomal RNAs provide an excellent example (in part because they have been so thoroughly studied). One structural feature in the small subunit rRNA by which the eubacteria can be distinguished from archaebacteria and eukaryotes is the hairpin loop lying between positions 500 and 545 (18), which has a side bulge protruding from the stalk of the structure. In all eubacterial cases (over 400 known) the side bulge comprises six nucleotides (of a characteristic composition), and it protrudes from the "upstream" strand of the stalk between the fifth and sixth base pair. In both archaebacteria and eukaryotes, however, the corresponding bulge comprises seven nucleotides (of a different characteristic composition), and it protrudes from the stalk between the sixth and seventh pair (18, 19). The small subunit rRNA of eukaryotes, on the other hand, is readily identified by the region between positions 585 and 655 (E. coli numbering), because both prokaryotic groups exhibit a common characteristic structure here that is never seen in eukaryotes (18, 19). Finally, archaebacterial 16S rRNAs are readily identified by the unique structure they show in the region between positions 180 and 197 or that between positions 405 and 498 (18, 19). Many other examples of group-invariant rRNA characteristics exist; see refs. 2, 18, and 19.
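The argument in that quote is, in effect, a decision rule over a handful of molecular features. Here is a minimal Python sketch of the rule. The class `SmallSubunitRRNA`, its field names, and the function `classify_domain` are hypothetical names made up for illustration, and the features are reduced to the three checks the quote describes; real classification compares full sequences rather than doing a lookup like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SmallSubunitRRNA:
    """Hypothetical summary of the features named in the quote (not a real data format)."""
    bulge_length: int              # nucleotides in the side bulge of the 500-545 hairpin
    has_prokaryotic_585_655: bool  # structure common to both prokaryotic groups in 585-655
    has_archaeal_signature: bool   # unique structure in 180-197 or 405-498


def classify_domain(rrna: SmallSubunitRRNA) -> str:
    """Apply the quoted signatures; return 'unclassified' if they don't all agree."""
    if (rrna.bulge_length == 6 and rrna.has_prokaryotic_585_655
            and not rrna.has_archaeal_signature):
        return "eubacteria"
    if (rrna.bulge_length == 7 and rrna.has_prokaryotic_585_655
            and rrna.has_archaeal_signature):
        return "archaebacteria"
    if (rrna.bulge_length == 7 and not rrna.has_prokaryotic_585_655
            and not rrna.has_archaeal_signature):
        return "eukaryotes"
    return "unclassified"
```

Requiring all three features to agree, rather than trusting any single one, mirrors the quote's point that each group has its own characteristic version of every well-characterized molecular system.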

It's shocking we missed archaea for so long. It's almost embarrassing: we thought the bacteria and archaea were largely of the same kind, when it turns out they're profoundly different from one another. It's a little like being an explorer and mistakenly thinking the Americas and East Asia are the same place. If you thought that, it would cause you to miss an awful lot, to put it mildly.

Woese et al put it this way:

As the molecular and cytological understanding of cells deepened at a very rapid pace, beginning in the 1950s, it became feasible in principle to define prokaryotes positively, on the basis of shared molecular characteristics. However, since molecular biologists elected to work largely in a few model systems, which were taken to be representative, the comparative perspective necessary to do this successfully was lacking. By default, Escherichia coli came to be considered typical of prokaryotes, without recognition of the underlying faulty assumption that prokaryotes are monophyletic. This presumption was then formalized in the proposal that there be two primary kingdoms: Procaryotae and Eucaryotae (15, 16). It took the discovery of the archaebacteria to reveal the enormity of this mistake.

Implicit in all this is the claim that phylogeny is a better approach to classification (or definition) than structure or morphology. In what sense is this true? After all, classification on the basis of structure makes sense: whether a cell has a nucleus or not is a very important and interesting question. But the phylogenetic question is more fundamental. For one thing, if you know which part of the phylogenetic tree you're on you can answer the question about the nucleus. Structure is a consequence of phylogeny, not the other way around. And so this approach to definition and classification is deeper than the old prokaryote / eukaryote distinction.
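One way to make "structure is a consequence of phylogeny" concrete is a toy tree in which a trait is recorded on the branch where it arises and is then inherited by everything descended from that branch. This is a sketch only: the `PARENT` and `TRAITS_GAINED` tables and the functions `lineage` and `has_trait` are hypothetical, the tree is a drastically simplified three-domain picture, and it ignores complications such as traits being lost along a lineage.

```python
# Hypothetical toy tree, stored as child -> parent (three-domain picture, simplified).
PARENT = {
    "bacteria": "root",
    "archaea+eukaryotes": "root",
    "archaea": "archaea+eukaryotes",
    "eukaryotes": "archaea+eukaryotes",
    "animals": "eukaryotes",
    "fungi": "eukaryotes",
    "plants": "eukaryotes",
}

# Traits recorded on the branch where, in this simplified picture, they arose.
TRAITS_GAINED = {"eukaryotes": {"nucleus"}}


def lineage(node):
    """Yield the node and then each of its ancestors, ending at the root."""
    while node is not None:
        yield node
        node = PARENT.get(node)


def has_trait(node, trait):
    """A node has a trait if the trait arose on the node or on any of its ancestors."""
    return any(trait in TRAITS_GAINED.get(n, set()) for n in lineage(node))


print(has_trait("fungi", "nucleus"))    # True: fungi descend from the eukaryote branch
print(has_trait("archaea", "nucleus"))  # False: the trait arose on a different branch
```

Knowing the position on the tree answers the structural question, but knowing only "no nucleus" cannot tell you whether you are looking at bacteria or archaea.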

Phylogeny also helps explain in what way biology is different from “ordinary” matter. In some sense it's strange that we treat biology as a subject apart, considering biological systems separately as a special form of matter. After all, if we could put carbon, hydrogen, oxygen, and other atoms together in just the right way, we could make a lifeform. It doesn't in principle matter whether this is done through reproduction or by using (say) a kind of super-STM (scanning tunnelling microscope) to painstakingly assemble the atoms. So why does biology seem so distinctive?

The answer lies in phylogeny and the tree of life. If you were to draw the analogue of a phylogenetic tree for a rock, it would be uninteresting, a single node, with no ancestors:

It's the selection (and variational) pressures acting on the phylogenetic tree which separate biology from other matter. You can view the complete phylogenetic tree – the tree of life – as a way of boldly exploring the space of matter. It's not the complete space, but it is a very large and interesting space** Indeed, there's some sense in which whatever human beings ultimately make is a product of the phylogeny, although it would be a stretch to say it should appear on the tree of life. Insofar as human beings obtain universal control over matter, the tree of life may be (loosely) regarded as exploring the entire space of matter.. Do this kind of exploration for a few billion years and you can explore a lot!

This is also the origin of the biological flywheel: evolution has spent a few billion years creating a giant tree of life. We now get to reverse engineer this enormous reservoir of interesting devices that biology has created, effectively using the tree of life as a source of ideas and principles for understanding matter. One way of viewing biology is that it's the search for interesting structure inside the phylogeny – it's like discovering a gold mine of extant great ideas about matter.

Analogously, imagine that instead of inventing computers ourselves we first learned about computers through contact with an alien civilization. Perhaps the alien civilization is extinct, but in their archaeological ruins we found the equivalent of a trillion trillion lines of code in their programming languages (or whatever they use to control their computers). Computer science for us would become an archaeological study, an exploratory dig through the alien code, understanding small ideas at first, and then gradually pulling deeper ideas out.

Indeed, modern computer programming is beginning to have this flavour: programmers today are in contact with a gigantic body of open code through services such as GitHub, Stack Overflow, and so on, not to mention any private codebase they may have access to. The science fiction author Vernor Vinge has written of a far future where programmer-archaeologist is the most important occupation, one built on the ability to extract meaning from the vast corpus of extant code:

Programming went back to the beginning of time… So behind all the top-level interfaces was layer under layer of support. Some of that software had been designed for wildly different situations. Every so often, the inconsistencies caused fatal accidents. Despite the romance of spaceflight, the most common accidents were simply caused by ancient, misused programs finally getting their revenge… “The word for all this is ‘mature programming environment.’ Basically, when hardware performance has been pushed to its final limit, and programmers have had several centuries to code, you reach a point where there is far more significant code than can be rationalized. The best you can do is understand the overall layering, and know how to search for the oddball tool that may come in handy

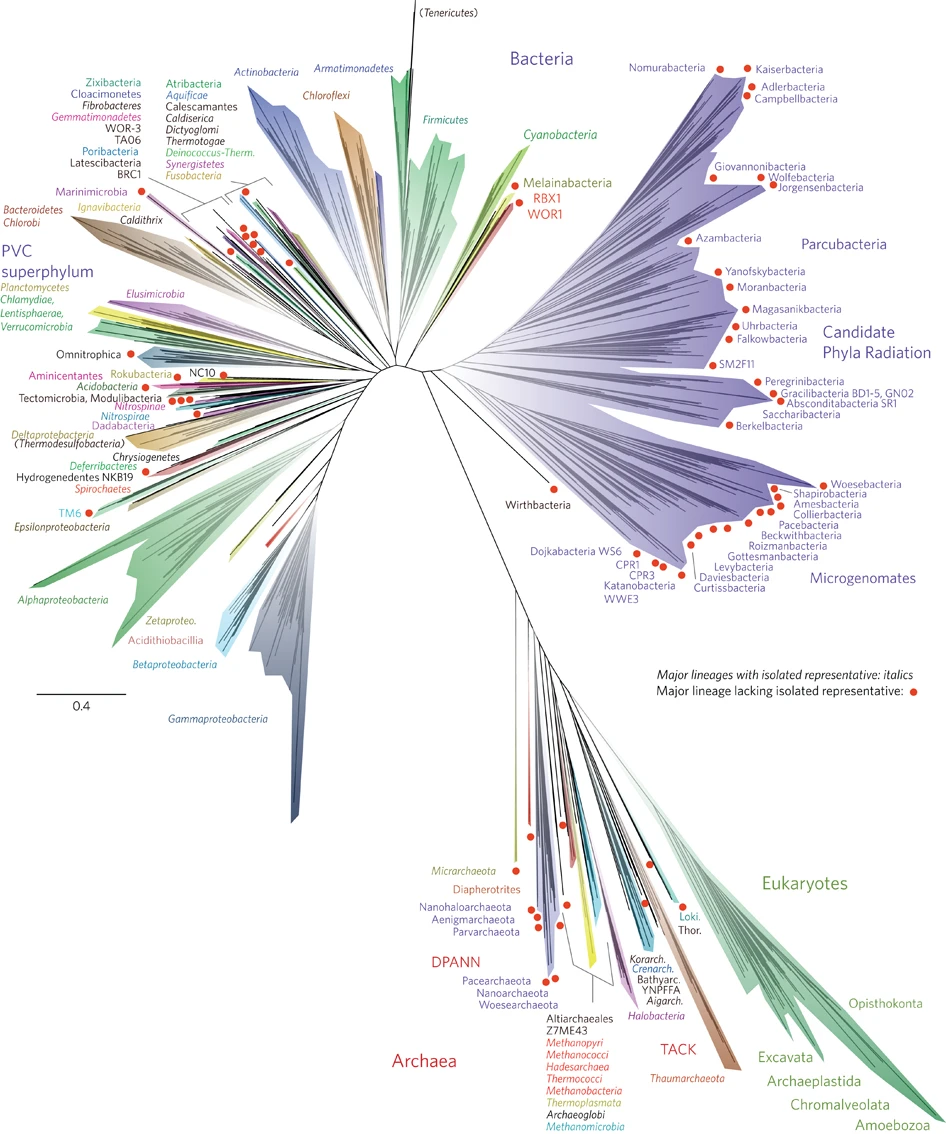

Returning to phylogeny, the Woesian revolution is merely part of an ongoing story. Here's a depiction of the tree of life from 2016** Laura Hug et al, A new view of the tree of life (2016). Via Noah Olsman.. It's based on genomic sequences from roughly 30,000 different species – itself a tiny fraction of all the species on earth.

One striking thing about this diagram: it doesn't show three domains of life, but rather two, with the archaea and eukaryotes together. It's an ongoing problem to decide whether to use two domains (the eocyte hypothesis) or the Woesian three-domain system. The classification above lends support to the eocyte hypothesis, although the authors caution:

The two-domain Eocyte tree and the three-domain tree are competing hypotheses for the origin of Eukarya; further analyses to resolve these and other deep relationships will be strengthened with the availability of genomes for a greater diversity of organisms.

One beautiful thing in this phylogeny: humanity barely shows up. We humans are, in fact, inside the opisthokont clade, itself a single point in the bottom right. That single point includes not just human beings, but all animals, and even the fungi (which are more closely related to animals than they are to plants). In the tree of life, the 100 or so billion human beings who have ever lived are barely visible. Carl Sagan has spoken movingly of the “pale blue dot”, emphasizing the smallness of earth and humanity when viewed in cosmological terms, “a lonely speck in the great enveloping cosmic dark”. We humans are, equally well, a pale point of genetic possibility, a tiny leaf on the immense tree of life.

In what sense is any of this fundamental?

I began these notes by talking about the supposed end of physics, and making a case that we're initiating a new phase in physics (and science), one based on an ongoing discovery of qualitatively new possibilities latent in matter, based on the fundamental laws of physics.

The obvious skeptical response to that claim is to say that while this is all certainly interesting, it isn't as fundamental as the search for the basic particles and forces.

I used to believe that. But over time I've gradually come to think it's wrong. The reasons are somewhat complicated, and not reducible to any single example. Rather, what changed my mind was exposure to a whole variety of different examples.

One example is public key cryptography, a beautiful set of cryptographic ideas discovered in the 1960s and 1970s. These ideas may be viewed as in some sense merely “applications” of Turing's basic theory of computing. But the ideas are so self-contained and elegant that it seems to me rather a fundamental new field. Yes, it's grounded in Turing's theory, but it has its own internal logic.
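To give a flavour of that internal logic, here is a toy version of RSA, one well-known public key scheme. It is a sketch for illustration only: the primes are tiny, there is no padding, and the function names `make_keys`, `encrypt`, and `decrypt` are made up here. Nothing in it should be used for real security.

```python
from math import gcd


def make_keys(p: int, q: int, e: int = 17):
    """Build a toy RSA key pair from two (small) primes p and q."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to (p - 1) * (q - 1)")
    d = pow(e, -1, phi)  # modular inverse of e; needs Python 3.8 or later
    return (n, e), (n, d)  # (public key, private key)


def encrypt(message: int, public_key) -> int:
    """Anyone holding the public key can encrypt a number smaller than n."""
    n, e = public_key
    return pow(message, e, n)


def decrypt(ciphertext: int, private_key) -> int:
    """Only the holder of the private key can undo the encryption."""
    n, d = private_key
    return pow(ciphertext, d, n)


public, private = make_keys(61, 53)
ciphertext = encrypt(65, public)
assert decrypt(ciphertext, private) == 65
```

The striking part is the asymmetry: the key used to lock a message can be published openly, while unlocking requires `d`, which is believed to be hard to find from the public key when the primes are large.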

In the TV show Dr. Who, the central character, the Doctor, lives and works inside the TARDIS, a device which superficially resembles a 1960s London police box** Due to user zir (zir.com) on Wikipedia.:

In fact, the TARDIS is really a machine able to bend time and space. And among its many qualities, it's “bigger on the inside than on the outside”** I must admire the Dr. Who scriptwriters' ability to save money on exterior location shots, incidentally coming up with a beautifully generative driver of clever plot points.. And so the interior contains all sorts of control rooms, bedrooms, even a swimming pool.

Science works surprisingly similarly. Apparently tiny innocuous details sometimes open up to yield beautiful, broadly applicable principles. For instance, the CRISPR technology for programming biology came in part out of work on how to manufacture yoghurt more reliably.

Furthermore, those beautiful broad principles may be partially decoupled from the underlying laws out of which they emerged. There are many possible universes, with physics very different from our own, in which the laws of public key cryptography** Though curiously, it's not yet clear how well public key cryptography works in our universe. Quantum computers are likely to break the most widely-used public key cryptosystems; classical computers are often regarded as unlikely to be able to do such a break (though it's never been proven). In this sense it's likely there are other laws of physics under which public key cryptography works better than in our universe. and of CRISPR hold. In this sense the laws of physics may actually be less important than we think, a relatively unimportant substrate, much like it matters less which computer you are using than which operating system.

Another test of this point of view comes from biology. It's true that biology contains many deep ideas – it's difficult not to be awed by the way DNA replicates, or the way a ribosome or CRISPR works. But it's tempting to retort “oh, but biology is just something local to the earth, and so in some sense is provincial. It's not like physics or chemistry or mathematics or even computer science, which are much more universal”.

Again: that was something I believed for a long time. But it's also wrong. Insofar as there are beautiful principles behind biology – ideas like evolution by natural selection, or the many amazing mechanisms inside the cell – those principles are true (and amazing) everywhere in the universe. It doesn't matter if, say, evolution by natural selection isn't actively operational in some particular locale. It's still an absolutely remarkable principle that is true everywhere.

To know the fundamental laws of nature without understanding the extraordinary principles hidden within them seems lame. It would be like knowing the rules of chess – the basic moves which the pieces may make – without understanding any of the higher-order principles which govern the game. It's in the latter, in both chess and science, that the most interest lies. And that is particularly true of those higher-order principles which are to a considerable degree decoupled from the underlying laws.

Summary map

Let's look at a few of the maps we've been examining:

Some pieces of this were only implicit in the earlier discussion. In particular, four dichotomies underlay much of the discussion: between stable and unstable matter; between equilibrium and non-equilibrium matter; between biological and non-biological matter; and between designer and naturalistic matter** I can't help but ponder the management consulting question: what happens when you make up two-by-twos from these? I've done so, and then tried putting different types of matter in different quadrants. The results are strangely compelling, though I'm not yet quite sure what to make of them..

It's striking the extent to which different sides of these dichotomies involve different kinds of expertise, and that being expert on one often means not being very good at others. For instance, many physicists are terrific at thinking about equilibrium matter, but no good at thinking about design. And so they focus on equilibrium, and don't think about design.

More broadly, this is all still very much a bespoke patchwork. We think of all these subjects as quite different: an expert on CRISPR might well say “oh, I don't know anything about nuclear reactions”. And yet both a nuclear physicist and a CRISPR expert are in some sense “just” moving protons, neutrons, and electrons around. Are there unifying abstractions which can be used to help merge some of these superficially different fields?

Part of the trouble, in my opinion, is that we don't have a single universal platform for manipulating matter. This is a beautiful thing about computing: we have a single platform (the Turing machine) which can be used to implement any computational process. But CRISPR, for instance, is very different from the kind of colliders used to make oganesson. Think about that latter process: to make oganesson we collide just the right atoms at just the right energies. And we have to hope for quite a bit of luck. It's a thoroughly bespoke process. And to get a genuine universal constructor for matter you need a device which can do not just that, but which can also make black holes and neutron stars; which can do everything CRISPR can do; and so on. It's a tall order.

Still, that's the arc of science. We're gradually developing machines with ever greater degrees of universality. Tools like CRISPR and bioreactors are pretty great steps in this direction, for instance. And people like John von Neumann, Eric Drexler, and David Deutsch have all considered variations on the idea of universal assemblers. Can we build such universal construction devices, so we can put everything on a single footing, no longer taking the bespoke approach? Can we make it so matter not only has affordances, it has a universal set of affordances? Is it possible to develop interfaces between what currently appear to be separate fields? What's your CRISPR-to-graphene API? There's a tension too: as we develop more general-purpose tools like CRISPR and bioreactors we in some sense unify previous methods for making matter. But we also open up new worlds.

Still, developing more universal methods of construction is only a small part of the overall problem. What qualities are possible in matter? And what are the most striking higher-level principles, unifying abstractions which can be used to help merge some of these superficially different fields?

In software engineering there is an observation known as Conway's Law** Melvin E. Conway, How Do Committees Invent? (1967)., applicable to organizations that design systems:

Any organization that designs a system... will inevitably produce a design whose structure is a copy of the organization's communication structure.

Elsewhere, I have argued that there is a kind of scientific analogue of Conway's Law** Michael Nielsen, Neural Networks and Deep Learning (Chapter 6) (2015).:

... fields start out monolithic, with just a few deep ideas. Early experts can master all those ideas. But as time passes that monolithic character changes. We discover many deep new ideas, too many for any one person to really master. As a result, the social structure of the field re-organizes and divides around those ideas. Instead of a monolith, we have fields within fields within fields, a complex, recursive, self-referential social structure, whose organization mirrors the connections between our deepest insights. And so the structure of our knowledge shapes the social organization of science. But that social shape in turn constrains and helps determine what we can discover. This is the scientific analogue of Conway's law.

You see this in the fact that our maps of matter are not just maps of nature; they're also maps of the human social organization of science. You see this, for instance, in the periodic table: there are scientific groups which are experts on particular columns of the periodic table, say, the halogens. This social division of labour reflects an underlying principle in nature. Certain principles govern the halogens which aren't operable for other elements; the groups can leverage those principles to develop a particular type of expertise. In a similar way, the tree of life reflects both nature and the human social organization of science: the principles which separate (say) plants on the tree of life are also leverage points for a division of labour.

Every once in a while, of course, we re-organize these maps as we change our understanding of the underlying principles. When Woese and collaborators understood the importance of archaea, that triggered a re-organization of biology into the three-domain system; that conceptual re-organization in turn triggered a re-organization (still partial and ongoing) of the social systems of biology.

Further complicating this picture is a tension between naturalistic discovery and imaginative design. This is perhaps most easily illustrated through my story of discovering computing through an alien civilization's computer code. At first all the action in computing would be among the archaeologists exploring the code. It'd be a golden age of discovery. Quite likely there would be tension between the archaeologists and the hackers trying to use those ideas to build their own systems. The hackers would mostly be (very slowly) re-discovering things already done much more effectively inside the alien codebase. It'd no doubt seem rather pedestrian to the archaeologists.

That's a fanciful story. But in fact there's a similar tension between naturalistic discovery and imaginative design in many existing sciences. Right now, in many fields, the deepest ideas are mostly coming from the naturalistic side. But over the long run, my bet is on human imagination, and that of our descendants.

Acknowledgements

Thanks to Laura Deming, Adam Marblestone, and Kanjun Qiu for helpful conversations.